Data Integration: Definition

Data integration is the process of combining data from several sources into a single, unified system. It is an essential step for any company that wants its data to be consistent, accessible, and accurate. Breaking down data silos is a crucial first step: by uniting fragmented information, a company can keep all of its data in a central location, in a format that both systems and people can use to extract actionable business intelligence.

If you work with IBM i data sources, for instance, you might want to consolidate your data in a data warehouse. A common belief is that IBM i is rigid and therefore hard to integrate, but this isn't accurate. Data integration lets multiple business processes access IBM i data and combine it with other business-critical data in their operations.

Another fundamental element of data integration is an EDI tool that lets applications access your unified data. Once the data is loaded into a data warehouse, many applications can access and use it.

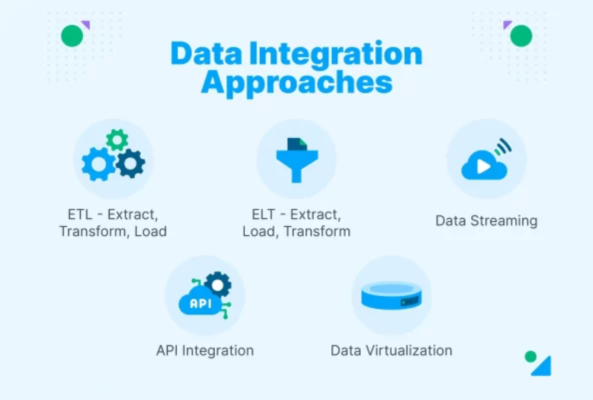

Five Approaches for Data Integration

The following five categories define different methods of data integration:

ETL (Extract, Transform, Load): In ETL, raw data is extracted from the source application, transformed, and then loaded into the target system. The transformation takes place in a staging area; once it is complete, the data is loaded into a target repository such as a data warehouse. Because the data arrives already cleaned and structured, analysis in the target system is faster and more accurate. ETL is well suited to smaller datasets that need complex transformations.

- Extract: data is pulled from the source systems.

- Transform: the data is changed according to predefined rules.

- Load: the data is stored in the target system.
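The three ETL steps can be sketched in Python with only the standard library. This is a minimal illustration under stated assumptions, not a production pipeline: the CSV text stands in for a source application, a plain list acts as the staging area, and an in-memory SQLite table stands in for the data warehouse. The column names and cleaning rules are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical source export; in practice this would come from the source application.
SOURCE_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,5.00
3,,7.50
"""

def extract(text):
    """Extract: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform in a staging list: drop incomplete rows, normalize names, store amounts in cents."""
    staged = []
    for row in rows:
        name = row["customer"].strip()
        if not name:
            continue  # reject rows that fail the (assumed) completeness rule
        staged.append((int(row["order_id"]), name.title(), round(float(row["amount"]) * 100)))
    return staged

def load(conn, staged):
    """Load: write only the already-transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", staged)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(conn, transform(extract(SOURCE_CSV)))
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# → [(1, 'Alice', 1999), (2, 'Bob', 500)]
```

The target only ever sees clean rows; the incomplete third record is filtered out before loading.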

ELT (Extract, Load, Transform): In ELT, data is loaded directly into the target system, which can be a cloud data lake or warehouse, and is then transformed inside that system. ELT works better for large datasets because loading usually proceeds faster when no transformation has to happen first.

- Extract: data is pulled from the source systems.

- Load: the data is loaded into the target system.

- Transform: the data is changed according to predefined rules inside the target system.
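For contrast, an ELT flow lands the raw rows first and runs the transformation inside the target system. In this minimal sketch an in-memory SQLite database stands in for the cloud warehouse or data lake, and the transformation is plain SQL executed by that database; the table and column names are hypothetical.

```python
import sqlite3

# Hypothetical raw records, loaded exactly as they arrive from the source.
raw_rows = [
    ("1", " Alice ", "19.99"),
    ("2", "BOB", "5.00"),
    ("3", "", "7.50"),
]

conn = sqlite3.connect(":memory:")

# Extract + Load: land the untransformed data in a raw table in the target system.
conn.execute("CREATE TABLE raw_orders (order_id TEXT, customer TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", raw_rows)

# Transform: the target system itself cleans and reshapes the data with SQL.
conn.execute("""
    CREATE TABLE orders AS
    SELECT CAST(order_id AS INTEGER) AS order_id,
           UPPER(SUBSTR(TRIM(customer), 1, 1)) || LOWER(SUBSTR(TRIM(customer), 2)) AS customer,
           CAST(ROUND(CAST(amount AS REAL) * 100) AS INTEGER) AS amount_cents
    FROM raw_orders
    WHERE TRIM(customer) <> ''
""")

print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Because `raw_orders` stays in the target, the transformation can be rerun or changed later without extracting the data again.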

Streaming Data: Instead of loading data in batches, data integration systems can use data streaming to supply data warehouses, data lakes, and cloud platforms with analytics-ready data. Streaming moves data continuously, in real time, from the source to the target.

- Data is processed as it arrives.

- It is delivered continuously, in real time.

- It supports near-instant insights and decisions.
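The key difference from batch loading is that each record is processed and delivered as soon as it arrives. The sketch below imitates this with a Python generator standing in for a live message stream (a real deployment would read from a streaming platform); the machine event format and running-average metric are hypothetical.

```python
from typing import Iterable, Iterator

def event_source() -> Iterator[dict]:
    """Stand-in for a live stream: yields events one at a time instead of as a batch."""
    readings = [("machine-1", 10.0), ("machine-2", 4.0), ("machine-1", 14.0)]
    for machine, value in readings:
        yield {"machine": machine, "value": value}

def process_stream(events: Iterable[dict]) -> Iterator[tuple[str, float]]:
    """Update a per-machine running average and emit it immediately for every event."""
    totals: dict[str, float] = {}
    counts: dict[str, int] = {}
    for event in events:
        key = event["machine"]
        totals[key] = totals.get(key, 0.0) + event["value"]
        counts[key] = counts.get(key, 0) + 1
        # Deliver the updated metric right away rather than waiting for a batch to finish.
        yield key, totals[key] / counts[key]

for machine, avg in process_stream(event_source()):
    print(machine, avg)
```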

API Integration: Application programming interface (API) integration moves data between programs so that it can support the operations of the target application. For example, your human resources system might need some of the same data as your financial system. In this case, application integration ensures that the data coming from the finance application matches what the HR system requires.

- Typically, the systems exchanging data use their own APIs to send and receive it, which makes it possible to apply SaaS automation tools to simplify the integration.
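To illustrate the finance-to-HR example, the sketch below maps a payload shaped like one system's API response into the fields another system expects. It makes no network calls: the JSON payload, the field names, and the `to_hr_record` mapping are hypothetical, and in practice the payload would come from an HTTP request to the finance application's API.

```python
import json

# Hypothetical JSON response from a finance application's API.
finance_response = json.dumps({
    "employees": [
        {"emp_no": "E-100", "full_name": "Dana Lee", "annual_salary": "72000", "cost_center": "CC-7"},
        {"emp_no": "E-101", "full_name": "Sam Ortiz", "annual_salary": "66000", "cost_center": "CC-3"},
    ]
})

def to_hr_record(finance_employee: dict) -> dict:
    """Translate one finance record into the (assumed) schema the HR system's API accepts."""
    first, _, last = finance_employee["full_name"].partition(" ")
    return {
        "employeeId": finance_employee["emp_no"],
        "firstName": first,
        "lastName": last,
        "monthlySalary": int(finance_employee["annual_salary"]) / 12,
        # cost_center is intentionally dropped: the HR system does not need it.
    }

hr_payload = [to_hr_record(e) for e in json.loads(finance_response)["employees"]]
# hr_payload would then be sent to the HR system's API.
print(json.dumps(hr_payload, indent=2))
```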

Data Virtualization: Data virtualization is a data management method that lets you access and modify data without storing a physical copy of it in a single repository.

- Like streaming, data virtualization delivers data in real time. The virtualization layer gathers data from many systems when it is requested, so an application or user can access it on demand. This makes virtualization a good fit for systems that depend on high-performance queries.

- Data virtualization also lets you quickly and easily change a data source or adapt to changes in business logic.
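Conceptually, a virtualization layer answers each query by reaching into the underlying sources at request time instead of copying their data into a central store. The minimal sketch below models that idea in plain Python; the two lookup functions, the `VirtualCustomerView` class, and its fields are hypothetical stand-ins for real systems and a real virtualization product.

```python
from typing import Callable, Optional

# Hypothetical source systems; each function fetches live data when called.
def crm_lookup(customer_id: int) -> Optional[dict]:
    crm = {1: {"name": "Alice", "email": "alice@example.com"}, 2: {"name": "Bob", "email": "bob@example.com"}}
    return crm.get(customer_id)

def billing_lookup(customer_id: int) -> Optional[dict]:
    billing = {1: {"balance": 120.0}}
    return billing.get(customer_id)

class VirtualCustomerView:
    """Combines several sources on demand; nothing is copied or persisted centrally."""

    def __init__(self, sources: list[Callable[[int], Optional[dict]]]):
        self.sources = sources

    def get(self, customer_id: int) -> dict:
        result = {"customer_id": customer_id}
        for source in self.sources:
            record = source(customer_id)  # queried at request time, so the data is current
            if record:
                result.update(record)
        return result

view = VirtualCustomerView([crm_lookup, billing_lookup])
print(view.get(1))
# → {'customer_id': 1, 'name': 'Alice', 'email': 'alice@example.com', 'balance': 120.0}
```

Since the sources are just entries in a list, swapping a data source means changing that list rather than migrating stored data.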

The Data Integration Process

Gather Requirements and Resources: As you compile your data integration requirements, focus on the objectives your business wants to achieve. Then choose the technological, human, and financial resources you will need to reach those objectives.

- For instance, a manufacturer might want to compile information about several machines on the production floor. Its objective might be to better understand each machine's production rate and maintenance needs, so that decision-makers can choose which machines to buy, estimate each unit's cost of ownership, and see how each one contributes to the bottom line. The company also wants to use the machines' manufacturing data to build a more effective inventory management system that links material ordering to the overall production rate.

- Each machine currently generates data through its own operating software. To unify this data and make it valuable, data architects must ensure it is in a format that a centralized system can understand and store. This could require:

- A data cleansing and transformation application.

- People who know how to get the most out of that application.

- A data storage system that keeps data in formats that decision-makers and the inventory control system can use.

- If the business does not already have one, an enterprise resource planning (ERP) system capable of aggregating this data and feeding it to other systems, including inventory control.

- Once these prerequisites are in place, the business is ready to dig into the specifics of the data it wants to combine.

Data Profiling: Data profiling is the analysis of source data to better understand its quality, structure, and distinctive characteristics. Profiling is also where you identify potential problems.

- You also verify that the data meets your requirements. For instance, if the data you want to merge is sparse or inconsistent, you may not be able to use it to guide particular mission-critical decisions.
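A first profiling pass often just measures missing values, distinct values, and value types per column. The sketch below does that for a list of records using only the standard library; the sample machine rows and the `profile` function are hypothetical, and dedicated profiling tools typically report considerably more.

```python
from collections import Counter

# Hypothetical machine records pulled from a source system.
rows = [
    {"machine": "M1", "units_per_hour": 120, "last_service": "2024-01-10"},
    {"machine": "M2", "units_per_hour": None, "last_service": "2024-02-03"},
    {"machine": "M3", "units_per_hour": "95", "last_service": None},
]

def profile(records: list[dict]) -> dict:
    """Return per-column missing-value rate, distinct count, and observed Python types."""
    columns = {key for record in records for key in record}
    report = {}
    for col in sorted(columns):
        values = [record.get(col) for record in records]
        present = [v for v in values if v is not None]
        report[col] = {
            "missing_rate": round(1 - len(present) / len(values), 2),
            "distinct": len(set(present)),
            "types": dict(Counter(type(v).__name__ for v in present)),
        }
    return report

for column, stats in profile(rows).items():
    print(column, stats)
```

Here the mix of `int` and `str` values in `units_per_hour`, plus the missing entries, is exactly the kind of inconsistency profiling should surface before the data is merged.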

Design: During the design phase, you create the blueprint your company will use for automated data integration. In this phase:

- Use data mapping to show how data will travel from its source to its destination.

- Decide which kinds of data transformation you need, such as cleaning, smoothing, or aggregation.

- Determine the relationships among different kinds of data and the systems that will use them after the integration.

The design is your road map; treat it as your north star as the integration takes shape. At the same time, it needs to remain a highly adaptable document, because as integrations grow, stakeholders may find new uses for the data or new ways of using it.
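One way to make a data map concrete is to express it as configuration that code can execute: each target field names its source field and the transformation applied on the way. The mapping below is a hypothetical example for the manufacturing scenario described earlier, and `apply_mapping` is an illustrative helper, not any specific tool's API.

```python
from typing import Any, Callable

# Hypothetical data map: target field -> (source field, transformation).
MACHINE_MAP: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "machine_id": ("MachID", str.strip),
    "units_per_hour": ("Rate", float),
    "needs_service": ("SvcFlag", lambda flag: flag == "Y"),
}

def apply_mapping(source_record: dict, mapping: dict) -> dict:
    """Build a target record by following the data map field by field."""
    return {target: transform(source_record[source]) for target, (source, transform) in mapping.items()}

# Fields not in the map (here "Firmware") are simply not carried over.
raw = {"MachID": " M-7 ", "Rate": "118.5", "SvcFlag": "N", "Firmware": "3.2"}
print(apply_mapping(raw, MACHINE_MAP))
# → {'machine_id': 'M-7', 'units_per_hour': 118.5, 'needs_service': False}
```

Keeping the map as data rather than hard-coded logic supports the adaptability the design needs: adding a field for a new use is usually a one-line change.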

Implementation: The implementation stage puts your design and profiling findings into practice. As described above, this could involve Extract, Transform, Load (ETL), Extract, Load, Transform (ELT), data streaming, virtualization, API integration, or other methods.

As you put your system into use, keep an eye on:

- Whether your data map enables the capabilities it was designed to provide.

- The performance of your data transformation. Does it work efficiently, or do you need to change how the data is transformed?

- The systems that depend on this data. How are they benefiting from the implementation, and how does their improved performance support your higher-level objectives?

Review, Validate, and Monitor: Once implementation is complete, you must:

- Check that the data has been fully integrated into the required systems. You may still have silos to break down.

- Verify the effectiveness of your system by answering questions such as: Is the data accurate? Is it stable, or does it change too much to be useful? Does it need further refinement, or does it already have the structure you need?

- Monitor your implementation to observe changes over time. Monitoring can also include gathering feedback from employees on how the integration affects productivity across the company or makes their work easier.

- Reviewing, validating, and monitoring your data integration gives you the opportunity to keep refining your system so that it becomes more valuable over time.

These steps also make your system more flexible. Your company might, for instance, decide to launch a new product or service that would benefit from another kind of integration, or a new performance management system might require changes to your data integration.
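Part of the validation step can be automated by reconciling source and target after each load. The sketch below compares record counts and a per-record SHA-256 hash keyed by ID; the records, the key name `id`, and the `reconcile` helper are hypothetical, and real checks would usually also cover schemas and business rules.

```python
import hashlib
import json

def record_digest(record: dict) -> str:
    """Stable fingerprint of a record, independent of key order."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()

def reconcile(source: list[dict], target: list[dict], key: str = "id") -> dict:
    """Report counts, records missing from the target, and records whose contents differ."""
    src = {r[key]: record_digest(r) for r in source}
    tgt = {r[key]: record_digest(r) for r in target}
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }

source_rows = [{"id": 1, "qty": 5}, {"id": 2, "qty": 8}, {"id": 3, "qty": 2}]
target_rows = [{"id": 1, "qty": 5}, {"id": 2, "qty": 9}]
print(reconcile(source_rows, target_rows))
# → {'source_count': 3, 'target_count': 2, 'missing_in_target': [3], 'mismatched': [2]}
```

Running a check like this on a schedule turns "Is the data accurate?" into something you can track over time.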

A Real-World Case Study of Data Integration

Data integration is one of the main building blocks of Starbucks' rapid growth. The company uses its mobile app as a data collection tool under the Starbucks Rewards program and then combines the data gathered through the app with systems used for strategic decision-making.

- For instance, Starbucks records each customer's purchasing patterns in its rewards system. The company then combines this information with its sales system to create personalized offers for individual customers.

- Starbucks' digital infrastructure also includes a cloud-based artificial intelligence engine connected to its data. This engine uses data from the rewards app to suggest specific coffees, hot chocolates, and other drinks and treats to individual customers.

Data Integration Strategies for Companies

There are numerous ways to approach data integration; the one you choose will depend on your resources, objectives, and overall strategy.

Manual Data Integration: In manual data integration, a person collects, loads, and transforms the data by hand. That person, or their team, then organizes the data in one central location.

- Manual data integration often relies on spreadsheets or scripts that the person writes while combining the data.
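Such scripts are often short, one-off programs. A typical example joins two spreadsheet exports on a shared key; the sketch below does this with Python's `csv` module, where the file contents, the column names, and the choice of a left join are hypothetical.

```python
import csv
import io

# Hypothetical spreadsheet exports, shown inline instead of as files on disk.
sales_csv = "customer_id,total\n1,250\n2,90\n3,40\n"
contacts_csv = "customer_id,email\n1,ana@example.com\n3,raj@example.com\n"

def read_rows(text: str) -> list[dict]:
    return list(csv.DictReader(io.StringIO(text)))

# Index one sheet by the shared key, then attach its column to the other (a left join).
emails = {row["customer_id"]: row["email"] for row in read_rows(contacts_csv)}
combined = [{**row, "email": emails.get(row["customer_id"], "")} for row in read_rows(sales_csv)]

# Write the combined sheet back out as CSV text.
out = io.StringIO()
writer = csv.DictWriter(out, fieldnames=["customer_id", "total", "email"])
writer.writeheader()
writer.writerows(combined)
print(out.getvalue())
```

A script like this works for an occasional job, but someone has to rerun and maintain it by hand each time the sources change.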

Middleware Data Integration: Middleware integration uses software or a platform that acts as an intermediary in the process. The middleware handles how data is communicated and how the various systems and applications interact.

Application-Based Integration: Like middleware integration, application-based integration relies on software, but it comes with prebuilt integration tools. These applications are designed to work smoothly with other software. One of the main challenges of business process integration is reducing manual work, and application-based integration eliminates most of the manual coding that usually slows down an integration project.

Uniform Access Integration: Uniform access integration continuously presents data from several sources through a single interface. It offers a consistent view of all the data, which different stakeholders can access and use as they need.

Common Storage Integration: A common storage integration system combines data from several sources and stores it in a repository such as a data warehouse or data lake. Like a uniform access integration system, a common storage system is searchable, which makes it easier for users to find the information they need.

Four Fundamental Data Integration Applications

Although the applications for data integration are almost endless, these four are the most common: data ingestion, data replication, data warehouse automation, and big data integration.

Data Ingestion: Data ingestion is the movement of data from several sources to a designated storage location, such as a data lake or warehouse. Ingestion can happen in batches or in real time. The data usually has to be cleaned and standardized so that a data analytics tool can use it directly.
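Batch ingestion with light standardization can be sketched as follows: records from different sources are normalized into one shape and appended to the storage target in fixed-size batches. The list standing in for a data lake, the field names, the two date formats, and the batch size are hypothetical; a real-time pipeline would push each record as it arrives instead.

```python
from datetime import datetime
from typing import Iterable, Iterator

def standardize(record: dict) -> dict:
    """Normalize field formats so downstream analytics tools can use the data as-is."""
    signup = record["signup"]
    return {
        "email": record["email"].strip().lower(),
        # Assumes sources send either ISO dates (passed through) or US-style MM/DD/YYYY dates.
        "signup_date": (
            datetime.strptime(signup, "%m/%d/%Y").date().isoformat() if "/" in signup else signup
        ),
    }

def batches(records: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Standardize records and group them into batches of at most `size`."""
    batch: list[dict] = []
    for record in records:
        batch.append(standardize(record))
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # flush the final, partial batch

data_lake: list[dict] = []  # stand-in for the designated storage location
incoming = [
    {"email": " Ana@Example.com", "signup": "03/15/2024"},
    {"email": "raj@example.com ", "signup": "2024-04-02"},
    {"email": "LI@EXAMPLE.COM", "signup": "12/01/2023"},
]
for batch in batches(incoming, size=2):
    data_lake.extend(batch)
print(data_lake)
```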

Data Replication: In data replication, data is copied and moved between systems, for example from a database in a data center to a cloud-based data warehouse. Replication can take place in data centers or in the cloud and can run in bulk, in batches, or in real time. It helps ensure that important data is backed up and kept synchronized with your business processes.
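One common way to keep a replica synchronized without recopying everything is incremental replication based on a high-water mark: each run copies only rows changed since the previous run. In this sketch two in-memory SQLite databases stand in for the data-center database and the cloud warehouse, and the `products` table and its `updated_at` column are hypothetical.

```python
import sqlite3

source = sqlite3.connect(":memory:")   # stands in for the data-center database
replica = sqlite3.connect(":memory:")  # stands in for the cloud data warehouse
for db in (source, replica):
    db.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL, updated_at INTEGER)")

def replicate(since: int) -> int:
    """Copy rows changed after `since` into the replica; return the new high-water mark."""
    changed = source.execute(
        "SELECT id, price, updated_at FROM products WHERE updated_at > ?", (since,)
    ).fetchall()
    # Upsert so that updated rows overwrite their older copies in the replica.
    replica.executemany("INSERT OR REPLACE INTO products VALUES (?, ?, ?)", changed)
    replica.commit()
    return max((row[2] for row in changed), default=since)

source.executemany("INSERT INTO products VALUES (?, ?, ?)", [(1, 9.5, 100), (2, 4.0, 101)])
mark = replicate(since=0)      # initial bulk copy
source.execute("UPDATE products SET price = 10.0, updated_at = 102 WHERE id = 1")
mark = replicate(since=mark)   # later run copies only the changed row
print(mark, replica.execute("SELECT * FROM products ORDER BY id").fetchall())
# → 102 [(1, 10.0, 102), (2, 4.0, 101)]
```

This simple version does not capture deleted rows; replication tools that read database change logs are one way to handle deletes.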

Data Warehouse Automation: Data warehouse automation makes analytics-ready data available sooner by automating the data warehouse lifecycle. Every phase of that lifecycle, including modeling, mapping, data mart integration, and governance, is automated so that information is ready for applications to use more quickly.

Big Data Integration: Managing the massive volumes of structured and unstructured data in big data applications requires specialized technologies. A successful big data integration gives your analytics tools a complete view of your business. This calls for intelligent pipelines that can automatically migrate, consolidate, and transform vast amounts of data from many sources. To achieve this, a big data integration system must be scalable and able to profile data and ensure its quality as it flows in real time.

Benefits of Data Integration

Thanks to the following advantages, data integration usually offers a good return on investment.

Accuracy and Trust (Error Reduction): Data integration gives you a consistent, dependable way to deliver data to the systems and decision-makers that need it. Automated solutions lower the rate of human error, which produces more consistent data.

Data-Driven, Collaborative Decision-Making: Integrating data gives users a complete view of data from several sources, helping them see the numbers they need to make better strategic decisions. Because the data resides in a shared repository or system, working from the same pool of data also makes it easier for people to collaborate on extracting insights.

Efficiency and Time Savings: Automating your data integration helps you efficiently gather, transform, store, and use one of your most valuable resources: information. It also frees staff, analysts, and others to focus on other value-adding tasks.

Simplified Business Intelligence: With data integration in place, a business intelligence system consistently provides reliable information to support growth. When you add automation, stakeholders have more capacity to create successful plans instead of struggling through manual data collection and processing. When comparing data engineering with data integration, business intelligence is where the two meet: data engineers use integration to make deriving business insights simpler and more effective.

Conclusion

Application integration differs from data integration in that it only addresses linking software programs. An application integration solution may be nothing more than a connection between two different pieces of software.

Data integration, by contrast, manages the transport, transformation, and storage of data in addition to creating a data link between applications. A common use case is supplying data to BI and analytics solutions.